The widely repeated 'MIT report says 95% of enterprise AI pilots fail' headline misses the point entirely. The picture is not nearly that gloomy. Companies are finding AI challenging, but not because AI doesn't work; it's because they're approaching it the wrong way. The same report shows that general-purpose LLM pilots succeed 83% of the time and enterprise AI tools succeed 25% of the time: far better than 5%, albeit with substantial room for improvement.

The good news: with the right strategy, training, and focus, companies can move quickly up the learning curve, using AI in ways that not only move the needle but are genuinely transformative.

Because the '95% failure' headline continues to get attention, it’s worth reframing the underlying data. General-purpose LLM (GPLLM) pilots succeed 83% of the time, while rigid enterprise AI tools succeed only 25% of the time, and that is under the study's conservative definition of success: meaningful KPI improvement within just six months of pilot completion. This aligns with other research showing both widespread experimentation and promising but limited value capture. The more useful takeaway is that what separates success from failure is strategy.

So, why do so many enterprise AI pilots appear to fail?

There are many use cases, but we’ll focus on two fundamental categories here: using AI to improve existing processes, like a customer-service chatbot or supply-chain optimization, and using AI for personal productivity, like responding to emails and writing reports.

Enterprise AI tools fail for a straightforward reason: they're often selected by central teams based on AI capabilities rather than business fit and workflow realities. The result? Rigid, often siloed systems that don't integrate into existing workflows, require manual data migration, and can't learn from feedback, so users must correct the same mistakes again and again.

At the same time, 90% of companies report that at least some employees already use flexible, off-the-shelf tools like ChatGPT for the second category, personal productivity, many of them through personal accounts. That is tangible, readily accessible value.

What do successful teams do?

1. Drive effective use of general-purpose LLMs (GPLLMs)

Successful companies seize the opportunity to use GPLLMs to increase productivity. Many companies roll out a capability like Microsoft Copilot with the implicit assumption that the hard part is done. Unfortunately, most employees try it, shrug because it didn’t do what they wanted, and move on. Successful companies recognize that this is a change-management project. They not only roll out a capability like Copilot but also proactively engage and train teams, working with them to develop use cases and identify the benefits.

This is a critical step. Front-line teams understand their day-to-day jobs better than anyone. Online training isn't enough; many people don’t want to ‘read the manual’. You have to demonstrate how to use these tools and where they apply, and then workshop use cases. Get teams brainstorming how they can benefit, and then put those ideas to work. Once you capture their imagination and they “get” it, you’re halfway there.

Two highly effective approaches are hands-on workshops for users and AI hackathons for developers.

Workshops are effective because they're hands-on: you bring a team together with their actual workflow problems and spend a few hours having them use ChatGPT or Claude to do real work. They leave having accomplished something tangible, rather than with a manual to read later. This kind of focused, experiential learning moves them far enough up the learning curve to intuitively understand how AI can create value for them, which goes a long way toward ensuring they keep using it afterward.

Hackathons are hands-on learning for developers. The focused preparation and dedicated time to build with AI give individuals and teams the momentum they need to experiment, learn, and succeed. These events often create a meaningful mindset shift, prompting employees to seek out AI-driven opportunities in everything they do.

2. Deploy enterprise AI strategically

Successful companies think very practically about enterprise AI, focusing on workflow integration, adaptability, and learning. It’s both easy and tempting to go down a rabbit hole of complexity instead: defining evaluation criteria and scoring, identifying a seemingly endless and ambitious set of use cases, and running pilots.

Successful companies get started quickly, engaging lines of business to target problems that are actionable, realistic opportunities for AI. A few factors matter here: the use case is practical within existing processes and tools; the team can engage with it and get behind it; the effort is proportionate to the expected ROI; and it will generate visibility in the broader organization, with enough impact to generate interest.

In terms of technology approach, focus on tools with three key characteristics: 1) they integrate into existing workflows, tools, and processes; 2) they adapt easily as needs change; and 3) they learn from feedback.

Avoid any solution that requires copying or moving data between systems, or that forces a process change without any discernible benefit. And any system that has to be corrected repeatedly, exhibiting the same misunderstandings or making the same mistakes over and over, isn’t going to create value.

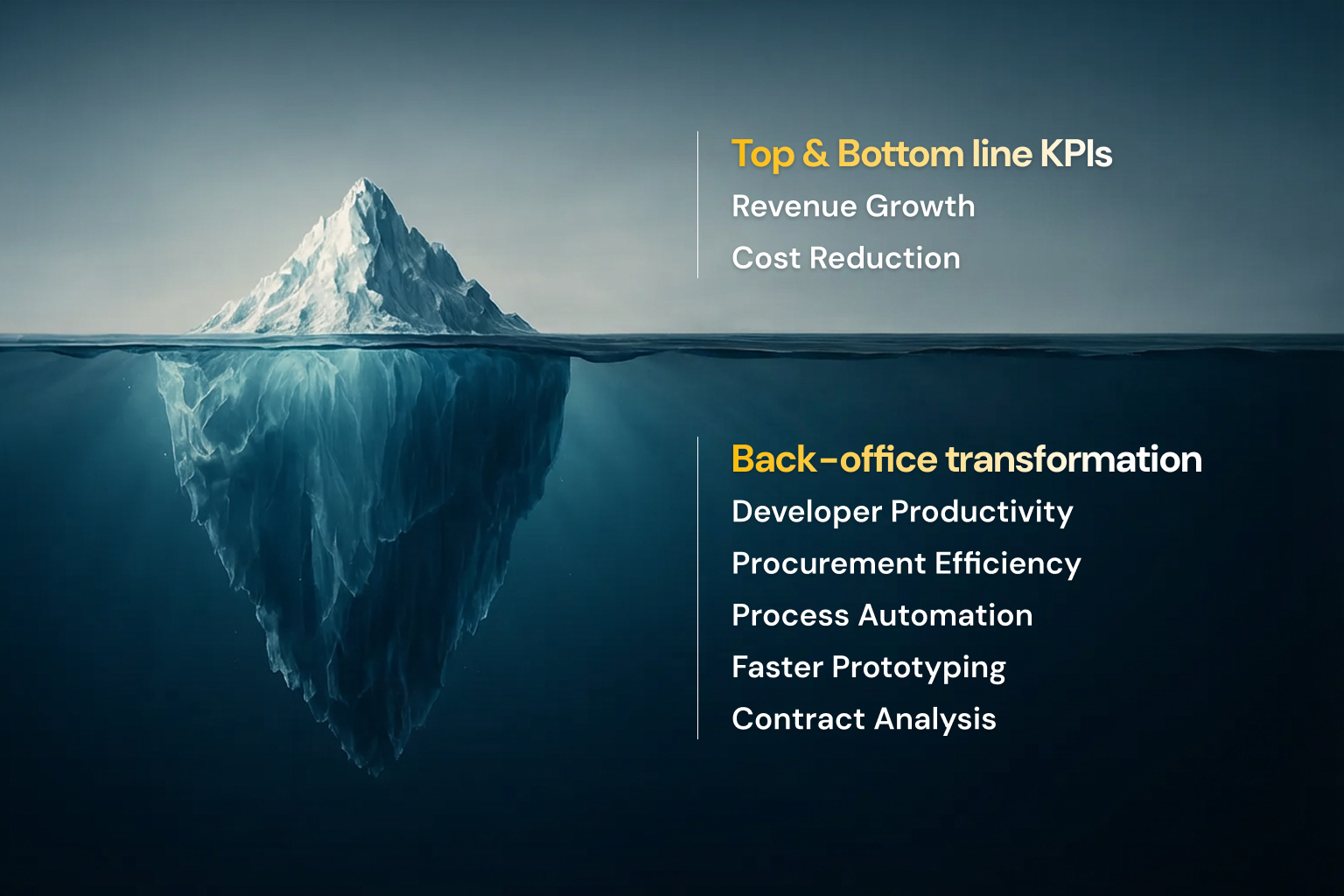

And don’t just chase top-line and bottom-line metrics. C-suites and boards are naturally oriented toward them, and ultimately they are what matters. That leads to a natural emphasis on AI for top-line revenue generation and bottom-line expense reduction, which are directly measurable and easy to “report up.” There are many opportunities here, from customer-service chatbots to high-quality content generation to automated reporting and analysis.

This, however, misses a huge opportunity for AI to improve, and even reinvent, back-office processes, whose benefits aren’t easily measured in dollars. For example, developer tools that increase coding efficiency may immediately offset hiring needs, creating a measurable bottom-line impact, but using those same tools to prototype concepts more quickly for customer validation creates benefits that are difficult to measure. Ultimately that should translate into faster time to market, competitive advantage, and eventually revenue, but the payoff is measured in years, not months. The same is true of reducing the time it takes to negotiate procurement contracts.

That doesn’t make these opportunities any less valuable, but pursuing them requires conviction and a commitment to improving the way the business operates: a belief that these improvements produce a faster, more nimble, more responsive business.

3. Reinvent the process

This is the really, really big opportunity: rethinking both the process and the objective.

The sooner you build this organizational AI expertise, the sooner you can start thinking more expansively about how AI can transform your business. What can you do differently because of AI? Previous waves of technology innovation gave rise to entirely new businesses. The internet didn’t just enable faster communication through email; it enabled streaming video and cloud computing. Cloud computing in turn enabled a raft of startups to launch and scale without large capital expenses. The iPhone and the App Store enabled ride-sharing. E-commerce enabled direct-to-consumer brands to launch without ever engaging a retailer.

Consider auto insurance claims processing, where the contrast is significant. On the left, AI tools accelerate individual steps but remain disconnected. A photo-assessment AI generates an estimate, but without context from police reports or telematics, accuracy suffers, leading to the familiar "supplement loop" in which body shops request corrections. Humans still coordinate the process end to end.

On the right, an agentic system orchestrates the entire workflow. It ingests multiple data sources simultaneously, cross-validates findings (the photo shows rear damage; the police report says "rear-ended"), and assigns confidence scores. High-confidence claims resolve automatically. Complex cases route to human experts whose decisions feed back into the system, improving future accuracy. The adjuster's role shifts from processing to judgment.
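The orchestration pattern described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not a description of any specific product: the `Claim` fields, the agreement-based confidence score, and the 0.9 auto-resolve threshold are all assumptions, and in a real system each field would come from a model or data feed (photo assessment, police-report extraction, telematics) rather than a plain string.

```python
from dataclasses import dataclass, field

# Hypothetical threshold: claims scoring at or above this resolve automatically.
AUTO_RESOLVE_THRESHOLD = 0.9


@dataclass
class Claim:
    claim_id: str
    photo_damage_area: str       # assumed output of a photo-assessment model
    police_report_area: str      # assumed extraction from the police report
    telematics_impact_area: str  # assumed inference from telematics data


@dataclass
class ClaimRouter:
    # Expert decisions are recorded so they can later be used to tune scoring.
    feedback: list = field(default_factory=list)

    def confidence(self, claim: Claim) -> float:
        """Cross-validate: share of sources agreeing with the photo assessment."""
        others = [claim.police_report_area, claim.telematics_impact_area]
        agreeing = sum(1 for area in others if area == claim.photo_damage_area)
        # The photo itself counts as one agreeing source.
        return (1 + agreeing) / (1 + len(others))

    def route(self, claim: Claim) -> str:
        """High-confidence claims resolve automatically; the rest go to a human."""
        if self.confidence(claim) >= AUTO_RESOLVE_THRESHOLD:
            return "auto_resolve"
        return "human_review"

    def record_decision(self, claim: Claim, human_decision: str) -> None:
        """Capture an adjuster's decision alongside the score it overrode or confirmed."""
        self.feedback.append((claim.claim_id, self.confidence(claim), human_decision))
```

With this sketch, a claim where the photo, police report, and telematics all indicate rear damage scores 1.0 and resolves automatically, while one where the police report disagrees scores about 0.67 and goes to an adjuster. `record_decision` only captures the adjuster's decision; how that feedback retrains the scoring is deliberately left out.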

Implementation strategy and principles

Get started. Aggressively.

Your competitors already have. A recent study measured the direct revenue impact of deploying AI chatbots in an e-commerce business: a 16% increase in the number of completed transactions. Chatbots lowered the friction to a sale, and in competitive markets that growth comes at a competitor’s expense. This also illustrates the danger of waiting: you can either capture market share or lose it.

As you implement, keep these technical principles in mind:

- Start with MVPs – Don't build everything at once. Deliver value in the fastest possible timeframe, then iterate.

- Use the right tool for the job – Code is deterministic; use it where precision matters. Use AI for creative, analytical, and non-deterministic work. Hybrid approaches often work best.

- Build learning systems – Capture human-in-the-loop feedback. Systems should improve over time, not repeat the same mistakes.

- Design for integration – Tools that require leaving existing workflows won't get adopted. Build or buy for seamless integration.
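The "right tool for the job" and "learning systems" principles can be combined in one small pattern. In this sketch, assuming a hypothetical invoice workflow, the non-deterministic step (vendor-name extraction, which in practice might be an LLM call) is injected as a plain function, the arithmetic stays in deterministic code, and human corrections are stored so the same mistake is not repeated. `HybridExtractor` and its methods are illustrative names, not an existing library.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import Callable, Dict, List, Tuple


@dataclass
class HybridExtractor:
    # Non-deterministic step: any callable mapping document text to a vendor
    # name (hypothetically a wrapper around an LLM). Supplied by the caller.
    extract_vendor: Callable[[str], str]
    # Human-in-the-loop corrections: maps a wrong AI output to the right value.
    corrections: Dict[str, str] = field(default_factory=dict)

    def vendor(self, text: str) -> str:
        raw = self.extract_vendor(text)
        # Learning loop: a mistake a reviewer already fixed is not repeated.
        return self.corrections.get(raw, raw)

    def record_correction(self, wrong: str, right: str) -> None:
        self.corrections[wrong] = right

    @staticmethod
    def total(line_items: List[Tuple[int, str]]) -> Decimal:
        """Deterministic step: exact decimal arithmetic, never delegated to a model."""
        return sum((qty * Decimal(price) for qty, price in line_items), Decimal("0"))
```

For example, if the extractor keeps returning "Acme Inc" and a reviewer records the correction to "ACME Corporation" once, every later call returns the corrected name, while `total` computes line-item sums with exact decimal arithmetic.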

Don’t forget about security, regulation, and organizational constraints

Everything we’ve talked about so far concerns use cases and value creation, but other constraints are just as essential to consider. We’ll touch on three big categories here:

1. Data security and privacy

There are a number of issues here. Chief among them is that sending proprietary data to external LLMs creates data-exposure risk, including leaks of competitive intelligence, customer data, and proprietary IP. Managing that risk means deciding what can be done safely now, classifying data by sensitivity, and choosing among public LLMs, private instances, and specialized capabilities according to the degree of control needed. Private LLM instances are not inherently less secure than enterprise cloud deployments, but they are less mature, so use care. If in doubt, start by piloting with synthetic or anonymized data; you don’t necessarily need to wait.
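One way to make "classifying data sensitivity" concrete is a routing policy that decides which deployment a piece of text may be sent to, plus a masking step for pilots on anonymized data. This is a rough sketch under loud assumptions: the three tiers, the destination names (`public_llm`, `private_instance`, `blocked`), and the regular expressions are hypothetical, and regex matching alone is far from adequate data classification in production.

```python
import re
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    RESTRICTED = 2


# Hypothetical policy: which deployment may receive each sensitivity tier.
DESTINATION = {
    Sensitivity.PUBLIC: "public_llm",
    Sensitivity.INTERNAL: "private_instance",
    Sensitivity.RESTRICTED: "blocked",
}

# Illustrative patterns only: an email address and a 13-16 digit card-like number.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def classify(text: str, internal_terms: set) -> Sensitivity:
    """Assign the highest tier triggered by any rule."""
    if CARD.search(text):
        return Sensitivity.RESTRICTED
    lowered = text.lower()
    if EMAIL.search(text) or any(term.lower() in lowered for term in internal_terms):
        return Sensitivity.INTERNAL
    return Sensitivity.PUBLIC


def anonymize(text: str) -> str:
    """Mask card-like numbers and emails, e.g. before using text in a pilot."""
    text = CARD.sub("[CARD]", text)
    return EMAIL.sub("[EMAIL]", text)


def route(text: str, internal_terms: set) -> str:
    """Return the (hypothetical) deployment allowed to process this text."""
    return DESTINATION[classify(text, internal_terms)]
```

A request mentioning an internal project name would route to the private instance, anything containing a card-like number would be blocked, and `anonymize` masks emails and card numbers before text is used in a pilot.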

Another concern, especially in regulated industries like financial services and healthcare, is that AI may create compliance risk or liability issues, and can wreak havoc if left unchecked. So limit the blast radius: start with use cases where errors are low-consequence, like internal research or generating draft documents, before tackling high-stakes decisions like customer commitments or compliance determinations. Build confidence in, and understanding of, the capabilities and risks by starting with internal use cases; incorporate human-in-the-loop review; implement audit trails; and understand agentic security methods and vulnerabilities.
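Human-in-the-loop review and audit trails can start simple. The sketch below, assuming a hypothetical set of high-stakes action names, blocks those actions unless a named person approves them and records every decision in an append-only log. Chaining each entry to the previous entry's SHA-256 hash is one common tamper-evidence technique, shown here as an option rather than a compliance requirement.

```python
import hashlib
import json
import time
from typing import Optional

# Hypothetical action names whose errors would be high-consequence.
HIGH_STAKES = {"customer_commitment", "compliance_determination"}
GENESIS = "0" * 64


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log: each entry stores the previous entry's hash,
    so editing or deleting an earlier entry breaks verification."""

    def __init__(self) -> None:
        self.entries = []

    def append(self, record: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"ts": time.time(), "prev": prev, **record}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True


def execute(action: str, ai_output: str, log: AuditLog,
            approver: Optional[str] = None) -> bool:
    """Allow an AI-proposed action; high-stakes actions need a named approver."""
    allowed = action not in HIGH_STAKES or approver is not None
    log.append({"action": action, "output": ai_output,
                "approver": approver, "allowed": allowed})
    return allowed
```

Low-consequence actions such as drafting a document proceed and are logged; a customer commitment without an approver is refused and logged; and `verify()` returns `False` if any earlier entry is edited afterward.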

2. Organizational Resistance and Change Management

As we touched on earlier, one of the biggest obstacles today is that employees simply aren’t using the tools. While 90% of enterprises report some AI usage, in most organizations that usage remains limited to a small fraction of employees. A related challenge is “change fatigue”: the sentiment that “we've tried digital transformation before and it failed; we’ll just wait this out.”

The keys here, as discussed earlier under driving effective use of GPLLMs, are hands-on practical training through workshops and hackathons, and directly engaging front-line managers. In particular, work with managers to develop lighthouse use cases: when employees see peers getting real value, adoption accelerates naturally. Forcing tools on resistant teams can backfire.

3. Vendor Lock-In and Technology Risk

Committing to a platform or vendor that becomes obsolete, or that is acquired and changes focus, is a very real risk. That makes it risky to rely on platform vendors to roll out AI capabilities, or to buy complete new platforms. Systems that layer onto existing workflows are easier to swap out and lower-risk than platforms that require wholesale replacement.
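Layering onto existing workflows usually means keeping vendor-specific code behind a thin interface you own. A minimal sketch, assuming hypothetical vendors A and B whose adapters are stubs here (a real adapter would wrap that vendor's SDK): workflow code such as `summarize_ticket` depends only on the `TextModel` protocol, so switching vendors changes one configuration value instead of every workflow.

```python
from typing import Dict, Protocol, Type


class TextModel(Protocol):
    """The only model interface that workflow code depends on."""

    def complete(self, prompt: str) -> str: ...


# Hypothetical adapters: stubs standing in for wrappers around vendor SDKs.
class VendorAModel:
    def complete(self, prompt: str) -> str:
        return f"[vendor A] {prompt}"


class VendorBModel:
    def complete(self, prompt: str) -> str:
        return f"[vendor B] {prompt}"


# Swapping vendors is a configuration change, not a rewrite.
MODELS: Dict[str, Type] = {"a": VendorAModel, "b": VendorBModel}


def build_model(name: str) -> TextModel:
    return MODELS[name]()


def summarize_ticket(model: TextModel, ticket: str) -> str:
    # Business logic never imports a vendor SDK directly.
    return model.complete(f"Summarize this support ticket: {ticket}")
```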

None of these constraints is insurmountable. Don’t wait for perfect conditions and fall behind competitors who are building expertise now. Develop a practical approach that matches your actual constraints.

A Practical Roadmap

The framework above describes what successful AI adoption looks like: the three phases companies need to move through as they build capability. But how do you actually execute it? Here's a practical roadmap for getting started.

1. Commit

The first step is also the most important: committing to actual implementation, not more analysis. You may, and probably will, change course along the way, and that's fine. But without a genuine commitment to changing your business, not just studying it, you'll end up in an endless cycle of evaluation.

If you already have the necessary clarity and expertise internally, by all means move forward on your own. If you don't, find someone who can help you maintain focus and avoid pitfalls that could delay progress by months.

2. Analyze

Look at your business and identify workflows where AI could be useful. Focus on areas where you currently have friction. For example, if you're spending too much time coordinating with contract development teams, dealing with misunderstandings and scope changes, that's an excellent place for AI to help, both by creating better specs and by automating routine development tasks.

Another example: your customers are dissatisfied with your automated support, unable to find solutions or reach an agent. Or your marketing team can't maintain content quality for new product launches because of the sheer volume required. These are the kinds of problems where AI can create tangible value relatively quickly.

As your organizational AI capabilities increase, you'll be in a much stronger position to rethink and reinvent entire workflows. But that's overly ambitious if you haven't already developed the necessary competencies.

3. Prioritize

Prioritization requires balancing multiple factors. Can you implement this within existing processes and tools, or does it require wholesale changes? Will the team engage with this, or will they resist? Is the scope ambitious enough to generate visibility and interest, but realistic enough to actually deliver? Can you execute within available resources and a reasonable timeframe? Will this generate measurable ROI?

Depending on your circumstances, you may find additional considerations, but these are the most fundamental.

4. Implement

Start building, and don't overanalyze. You'll learn along the way and adjust course. Don't expect everything to go smoothly, but stay focused on your objectives.

Evaluate whether you have the skills and knowledge to do this on your own. If you don't, find an implementation partner who can help you execute while upskilling your team.

5. Measure and correct

You will have successes and failures, so make sure you understand what works and adjust course accordingly. Choose KPIs to measure both progress and impact. For example, if you're fixing a support process, are your customer satisfaction scores increasing? Do you understand why? Are you A/B testing, and can you discern incremental impacts? What can you learn that improves your ROI over time? You need a strategy not just to implement, but to improve.
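For a binary KPI, such as whether a customer rated a support interaction as satisfactory, one standard way to check whether an A/B difference is more than noise is a two-proportion z-test. A minimal sketch, assuming randomly assigned, independent interactions and sample sizes large enough for the normal approximation; other tests (for example, a chi-squared test or a Bayesian comparison) are equally valid choices.

```python
from math import erf, sqrt
from typing import Tuple


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> Tuple[float, float, float]:
    """Compare success rates of control (A) and treatment (B).

    Returns (lift, z, two_sided_p), where lift = rate_b - rate_a.
    Uses the pooled-variance normal approximation; undefined if the
    pooled rate is exactly 0 or 1 (standard error of zero).
    """
    rate_a = success_a / n_a
    rate_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Standard normal CDF via the error function: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return rate_b - rate_a, z, 2 * (1 - phi)
```

With hypothetical counts of 400 satisfied out of 1,000 in the control group and 460 out of 1,000 with the new process, the lift is 6 percentage points, z is roughly 2.7, and the two-sided p-value is below 0.01, suggesting the improvement is unlikely to be chance alone.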

6. Repeat

This is a cycle. We're at the very beginning of AI adoption, and you'll find many ways to use AI, especially as you move toward reinventing workflows. The technology is moving quickly too: new use cases will emerge, things that aren't yet possible will become possible, and new approaches and infrastructure will be required. Your organization will also get better at using AI, so don't build for perfection; focus on getting your MVPs into production as quickly as possible.

Get started

The companies that will dominate their markets in 2-3 years are building AI expertise today. They're not waiting for perfect solutions, complete roadmaps, or regulatory clarity; they're learning by doing, guided by clear principles and practical focus.

The advantage compounds. Early movers build expertise that lets them capture value faster, opening a gap that is hard to close. That value funds further investment in capability and talent, creating a flywheel effect. Meanwhile, competitors who wait find themselves not just behind on technology, but behind on organizational learning, market position, and the financial resources to catch up.

This is not incremental improvement; it's a fundamental shift in how work gets done and value gets created. The internet didn't just make existing businesses faster; it enabled entirely new business models that didn't exist before. AI is following the same pattern. The question isn't whether this transformation will happen. The question is whether you'll lead it or be disrupted by it.

Start now. Build expertise with general-purpose tools. Deploy enterprise AI strategically where it fits your workflows. And begin reimagining what's possible when AI enables you to rethink entire processes, not just automate steps.

The gap between leaders and laggards is already growing. Which side is your company on?

About the Authors

Bill Queen helps leadership teams see the strategic connections others miss: how market dynamics, technology shifts, and competitive positioning intersect to create opportunities before they become obvious. He advises on AI strategy from a business perspective: which opportunities to pursue, how to build organizational capability, and how to create competitive advantage and sustainable value propositions. He has led initiatives from developing new products and categories to executing strategic acquisitions at companies including Keysight Technologies, CSR/Zoran, and HP. Prior to technology, he was lead guitarist and songwriter in PKRB, an original rock band, which was a crash course in building something from nothing and iterating fast. He holds an MBA from MIT Sloan. [Read more strategic analysis at billqueen.substack.com]

Connect: Bill Queen (LinkedIn)

Dr. Ofer Hermoni is an entrepreneur, AI strategist, and advisor to global enterprises on AI adoption, innovation, and organizational transformation. He is the Founder of iForAI, a consultancy focused on AI workforce upskilling, AI strategy, and hands-on AI implementation for mid-market and Fortune 500 organizations. Ofer is the co-founder and former elected Chair of the Linux Foundation AI & Data, and an active contributor to NIST’s AI Safety Institute Consortium. With a PhD in computer science and more than 60 patents in AI, security, and networking, he helps companies build practical, high-impact AI capabilities that scale. [Read more at medium.com/@oferher]

Connect: Dr. Ofer Hermoni (LinkedIn)

Dr. Ofer Hermoni

Founder & Chief AI Officer

Bill Queen

Advisor, Silicon Catalyst